Live SEO case study: dealing with a negative SEO attack

Instead of writing a normal SEO case study that tells the full story from start to finish (usually leading to success), this is a live SEO case study on how I try to deal with a negative SEO attack. What you are reading is happening live NOW. I have no way of knowing how long it takes for Google to respond to changes, apart from my personal estimate of 6-8 months. And I have no way of knowing what effect (if any) my counter actions will have, but since I am doing pretty much everything according to best practices, I have no reason to expect anything other than positive results over time. Maybe the live format adds a bit of excitement to my story, maybe not. All in all, I think this will become a very good example of the unpredictable timelines that come with SEO.

Recently one of the websites I own got hit by negative SEO. As most of my sites are filled with evergreen content, have solid backlink profiles, and have been generating passive income for years, I do not put much focus on them apart from security maintenance and occasional UX improvements. For this particular site, the first sign of something unusual was an unexpected, massive drop in traffic. After a quick review it became clear that the drop happened because an outsider had, over a longer period of time, created tens of thousands of spammy backlinks and injected tens of thousands of low quality pages into Google SERPs through a querystring hack. Once I knew what was happening, I started taking immediate counter actions.

Dealing with injected content

My initial concern was of course the content injection - how had it happened, and was it also affecting the security of the site?

It turned out the attacker had exploited a very common piece of functionality: echoing the internal search term into the content of the results page. My mistakes were threefold: 1) I was not sanitizing incoming GET parameters for known stopwords, 2) each search query created a new canonical URL for its results page, and 3) the returned search result pages were index, follow instead of noindex, nofollow.
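The three mistakes can be illustrated with a minimal Python sketch. It is not the code running on the site; the `q` parameter name, the `BLOCKED_TERMS` list, the length limit and the `https://example.com/search` URL are all hypothetical placeholders.

```python
import re
from typing import Optional
from urllib.parse import parse_qs, urlsplit

# Hypothetical blocklist; a real one would be built from the terms seen in the attack.
BLOCKED_TERMS = {"casino", "viagra"}
MAX_QUERY_LENGTH = 60  # assumed limit, tune to what real visitors search for


def clean_search_term(raw: str) -> Optional[str]:
    """Return a normalized search term, or None if the query should be rejected."""
    term = re.sub(r"\s+", " ", raw).strip().lower()
    if not term or len(term) > MAX_QUERY_LENGTH:
        return None
    # Reject anything that looks like a URL or markup.
    if re.search(r"https?://|www\.|[<>]", term):
        return None
    # Reject queries containing any blocked word.
    if set(re.findall(r"\w+", term)) & BLOCKED_TERMS:
        return None
    return term


def search_page_head(url: str) -> dict:
    """Build the SEO-relevant head values for an internal search results page."""
    raw = parse_qs(urlsplit(url).query).get("q", [""])[0]
    return {
        "term": clean_search_term(raw),
        # Search result pages are never meant to be indexed.
        "robots": "noindex, nofollow",
        # One fixed canonical instead of a new canonical URL per query.
        "canonical": "https://example.com/search",
    }
```

A rejected query returns `term` as `None`, so the template can show a neutral "no results" page instead of echoing the attacker's text.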

Another hole the attacker exploited followed a similar pattern - but with feeds.

When the attacker linked to search result pages with links like the ones above, it created an opportunity for Google to discover a dynamic search result page or dynamic search feed page with a piece of low quality spammy content for hungry bots to crawl and index. Ouch and sigh.

The downside of this kind of content injection is that the site owner does not notice anything unless they monitor the SERPs or Search Console daily for content that falls outside the submitted sitemap.

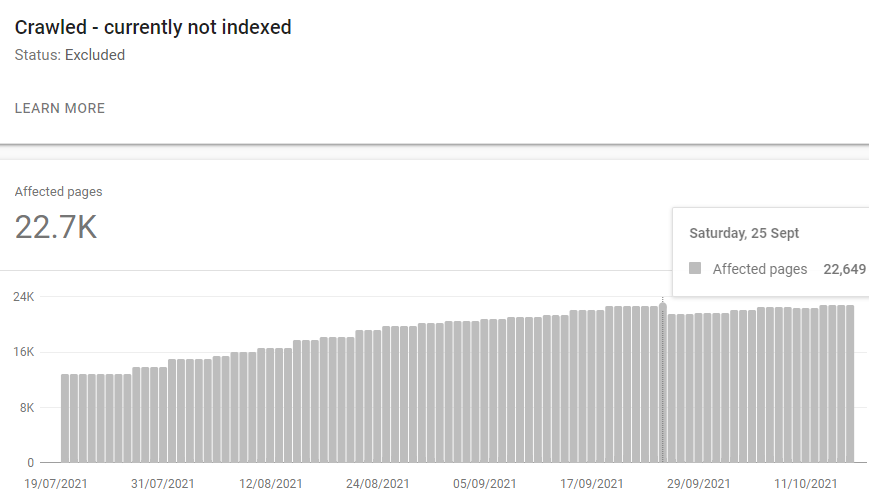

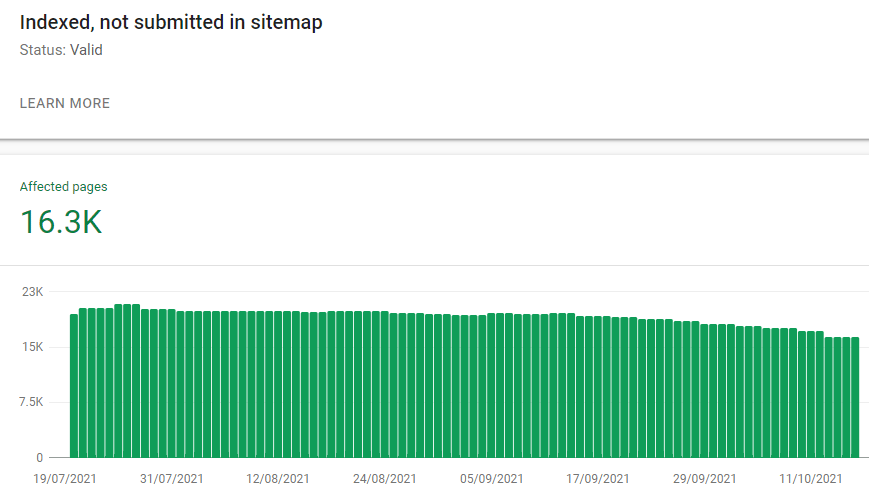

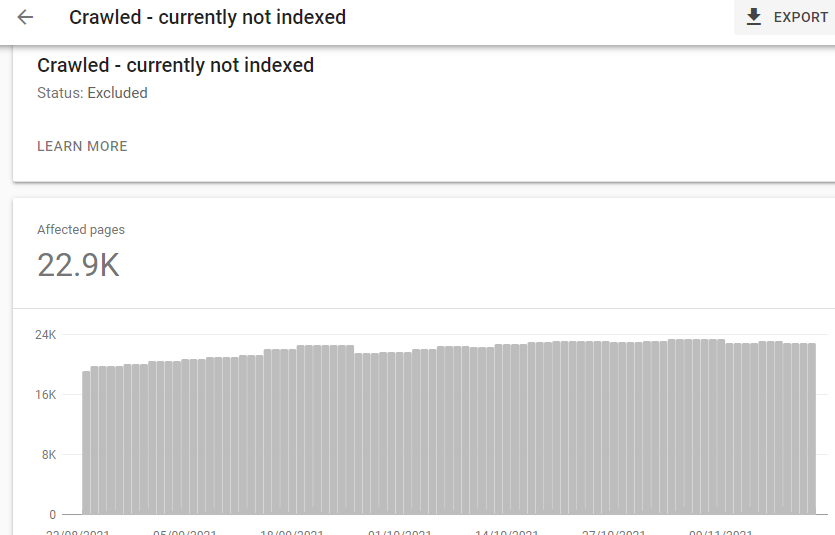

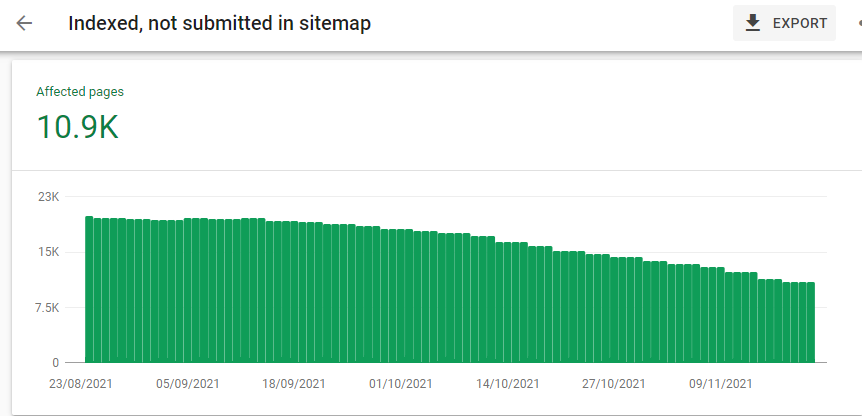

You would think that Google is smart enough to identify URLs like the ones above as spam, but the opposite happened. Nearly 23,000 such pages show up in Google Search Console under Crawled, currently not indexed, and over 15,000 pages show up as Indexed, not submitted in sitemap. And these numbers have already been reduced by my defensive counter actions.

Once I knew what was happening, I started hardening the system.

My initial action was to add noindex, nofollow to the output of the affected pages and then block the affected feed URL patterns in robots.txt. The goal was to prevent further damage ASAP. These changes were applied on Sep 18th.
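A rule like this can be checked before it goes live. The Python sketch below uses the standard library's `urllib.robotparser`; the `/feed/search/` path and the example URLs are hypothetical, since the real feed patterns are not listed here. Note that this parser does plain prefix matching, so the sketch sticks to a simple path prefix.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt blocking an assumed feed URL prefix.
ROBOTS_TXT = """\
User-agent: *
Disallow: /feed/search/
"""

# The tag added to the affected search result pages.
ROBOTS_META = '<meta name="robots" content="noindex, nofollow">'


def is_crawlable(robots_txt: str, url: str, agent: str = "Googlebot") -> bool:
    """Return True if the given robots.txt allows `agent` to fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)
```

One design point worth keeping in mind: a crawler can only see a noindex tag on pages it is allowed to fetch, so the two measures work best on separate sets of URLs, as was done here (the tag on the search pages, robots.txt on the feeds).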

As neither of the above removes the injected content from Google's search index, my next step was filing content removal requests for specific URL patterns and segments. These requests were filed on Sep 21st.

And last but not least, on Sep 28th I placed some filtering rules in the server configuration. If the URL or querystring contains any terms I consider part of the attack, the server returns HTTP 410 Gone. This should speed up the removal of these URLs.
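The actual rules live in the server configuration and are not reproduced here. As an illustration of the same idea at application level, here is a minimal Python WSGI sketch; the `SPAM_TERMS` tuple is a hypothetical stand-in for the real term list.

```python
from urllib.parse import unquote_plus

# Hypothetical terms; the real list would come from the injected URLs.
SPAM_TERMS = ("casino", "viagra")


def looks_like_attack(path: str, query_string: str) -> bool:
    """Check the decoded path and querystring for any known spam term."""
    haystack = unquote_plus(f"{path}?{query_string}").lower()
    return any(term in haystack for term in SPAM_TERMS)


def gone_filter(app):
    """Wrap a WSGI app so that attack-like URLs get a 410 Gone response."""
    def middleware(environ, start_response):
        path = environ.get("PATH_INFO", "")
        query = environ.get("QUERY_STRING", "")
        if looks_like_attack(path, query):
            body = b"410 Gone"
            start_response("410 Gone", [
                ("Content-Type", "text/plain"),
                ("Content-Length", str(len(body))),
            ])
            return [body]
        # Everything else is passed through to the real application.
        return app(environ, start_response)
    return middleware
```

Substring matching is deliberately blunt. It can also hit legitimate URLs that happen to contain a term, so the list needs to be chosen with care.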

Dealing with spammy backlinks

The injected content got indexed in the first place because the attacker had created tens of thousands of low quality backlinks pointing to URLs like the ones showcased above. The positive side of an attacker using spammy backlinks as part of an SEO attack is that most of the time such backlinks are very short-lived. They come from domains that were hacked, or that were bought and sold time and again, or they use querystring hacks very similar to what I was experiencing. So I know that the issue will fix itself over time.

The downside of all the above is that it might take more time than I would like. So instead I chose to spend a couple of weekends going through all the backlinks the site had, identifying the bad domains and weeding them out entirely. This resulted in the disavow file growing from a very moderate 25 domains to 255 domains. The new disavow list went live on Oct 16th.
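Once the bad domains are identified, building the file itself is mechanical. The Python sketch below shows one way to do it, assuming the bad backlinks are available as a list of URLs. The example domains are made up, and the output uses the `domain:` line format and `#` comments that Google's disavow tool accepts.

```python
from urllib.parse import urlsplit


def to_domain(link: str) -> str:
    """Extract the lowercased hostname from a URL or a bare host/path string."""
    parts = urlsplit(link if "://" in link else f"//{link}")
    return parts.hostname or ""


def build_disavow(bad_links, existing_domains=()):
    """Merge newly found bad domains into an existing list and render the file."""
    domains = set(existing_domains)
    for link in bad_links:
        host = to_domain(link)
        if host:
            domains.add(host)
    lines = ["# Disavow list, one domain per line"]
    lines += [f"domain:{domain}" for domain in sorted(domains)]
    return "\n".join(lines) + "\n"
```

Disavowing at domain level rather than URL by URL matches the approach described above of weeding out the bad domains entirely.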

The results - Sep 18th 2021 to Oct 17th 2021

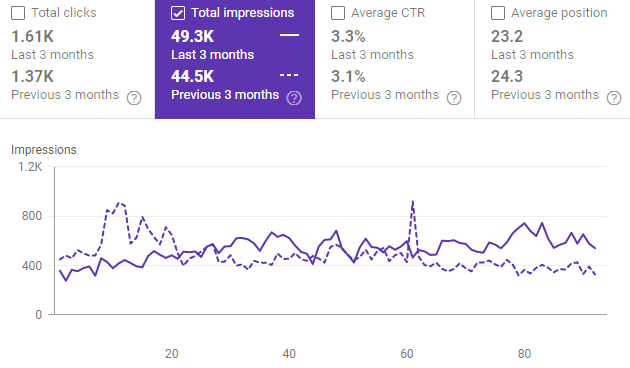

Clicks, impressions, CTR and average position have remained more or less unaffected. All are very, very low.

What I do notice is that the number of injected spammy pages has begun dropping at an increased pace. Before my initial changes Google was dropping approx. 400 pages of injected spammy content a month. Now the rate is approx. 3000 pages a month.

Interim update and some observations - Nov 8th 2021

Lately I've been thinking about how and why link spam attacks work so well for negative SEO. I think I have found a theoretical answer. I believe the secret lies in misusing crawl budget.

By default Google allocates a specific crawl quota to each site. When a not-so-nice SEO person creates spammy backlinks (with querystring spam etc.) towards a site, the crawl budget that normally goes into crawling legit URLs starts being wasted. When the number of spammy backlinks exceeds the number of valid URLs on the site by some factor x (which likely relates to how Google defines the crawl budget for the site), that is when the issues start to escalate. Google slows down crawling the good URLs it should normally crawl, and puts more focus on the "discovered", possibly fresh URLs that the link spammer has created.

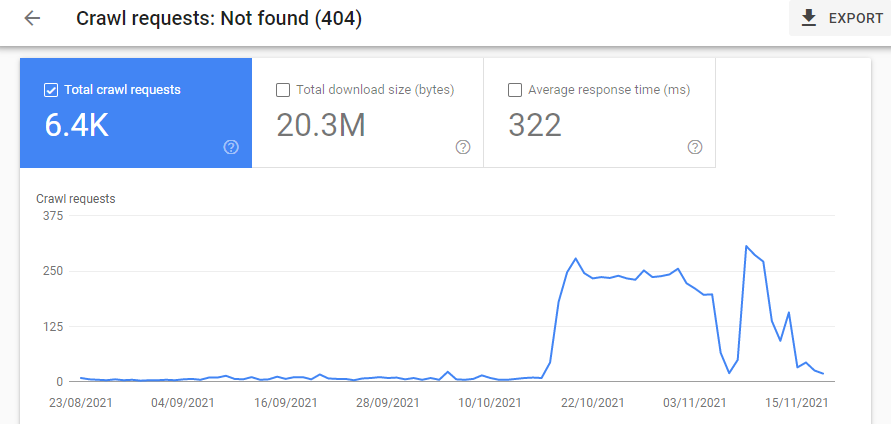

How did I come to this conclusion? By looking at GSC crawl stats, I can see that in the past week Googlebot has made only 600 "valid crawl requests" and closer to 3000 "spam related crawl requests" - and there are at least some 26K more spammy backlinks in the GSC crawl queue.

Or to put it the other way: right now roughly 84% of the crawl budget that should have gone into crawling quality content has gone to kingdom come. Gee, no wonder my site no longer ranked, LOL.
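The share of wasted crawl requests is simple arithmetic: spam requests divided by all requests. The Python sketch below does the calculation on a list of crawled URLs. The `/search?` prefix used to flag spam requests is a hypothetical classifier, and the input uses the rounded weekly figures from above (600 valid, 3000 spam), so it lands at about 83%, within a point of the percentage quoted.

```python
from typing import Callable, Iterable


def spam_crawl_share(urls: Iterable[str], is_spam: Callable[[str], bool]) -> float:
    """Return the fraction of crawl requests that hit spam URLs."""
    total = 0
    spam = 0
    for url in urls:
        total += 1
        if is_spam(url):
            spam += 1
    return spam / total if total else 0.0


# Rounded weekly figures: 3000 spam related requests, 600 valid ones.
crawled = ["/search?q=spam"] * 3000 + ["/articles/seo"] * 600
share = spam_crawl_share(crawled, lambda url: url.startswith("/search?"))
print(f"{share:.1%}")  # 83.3%
```

The same function can be pointed at real server logs filtered to Googlebot requests, which gives a way to track the ratio week by week.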

Depending on the angle you look at this from, it is either a bug or a feature in Google's system. Either way, this might allow some serious misuse, as Google is always trying to discover "fresh" content regardless of the source. How to solve this? I think the only way would be to give site owners the possibility to choose whether or not their crawl budget should be spent primarily on URLs within the sitemap.

Update - Nov 21st 2021

As expected, a Google core update happened this month, and there has been no significant change in the site's rankings or traffic levels. All in all, it is still far too early to expect changes.

The good news is that I have loads of updated statistics to share, and pretty much all the information confirms what I know about Google's and Googlebot's behaviour.

The Crawled, currently not indexed figure is firmly stuck at roughly the same level as a month ago. All in all this is no surprise, as Google has a very long memory and stores data for months, possibly years, for every link and page it encounters (especially those it has deemed not worthy of indexing). I have no doubt that over time this figure will begin dropping.

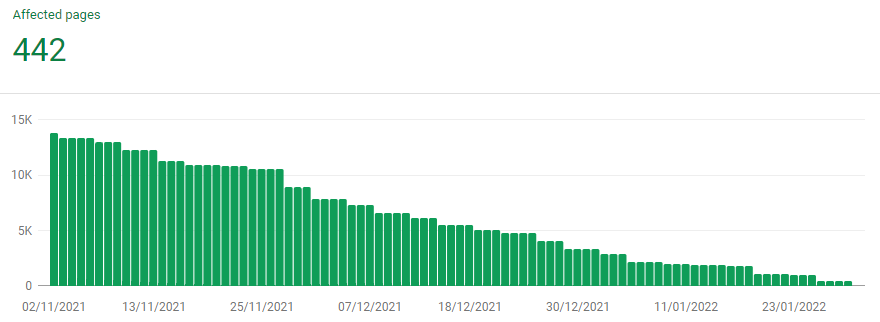

On the positive side, the Indexed, not submitted in sitemap figure is dropping at a very fast pace. It is now only 10.9K, meaning a drop of over 6K URLs in just one month.

Another figure that has started to drop is the number of internal links Google recognizes. It has dropped by over 100K, and is currently 783K.

One more interesting chart I want to share is how Google is treating the 410 pages. According to Google (via John Mueller), Googlebot should learn relatively quickly when a specific URL pattern returns something other than the standard HTTP 200 OK. But at least for now Googlebot still seems to be in "learning mode".

Interim update - Dec 27th 2021

December 2021 has been a somewhat interesting month for most people doing SEO for a living. As I expected, the December Google updates had zero effect on my case study site. On the positive side, all the "website health statistics" that I am tracking are progressing in the right direction.

The count of Crawled, currently not indexed pages seems to have passed its peak. The figure of 21.3K marks a healthy 7% decrease and the lowest value in 3 months. It is going to be a long ride (at least 6, likely 8-10 months) before it reaches "somewhat normal" levels, but likely (and hopefully) the worst is now behind me.

Indexed, not submitted in sitemap keeps on dropping at an increasing pace. The count has gone from a starting point of 16.3K to 10.9K and is now 4.8K. Very likely it will reach the "zero-point" by February 2022.
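A quick way to sanity-check a prediction like this is a straight-line extrapolation from the last two data points. The Python sketch below assumes the decline stays linear, which is a simplification, and uses the update dates of this study (Nov 21st and Dec 27th) as the measurement dates.

```python
import math
from datetime import date, timedelta
from typing import Optional


def projected_zero_date(d1: date, n1: int, d2: date, n2: int) -> Optional[date]:
    """Extrapolate linearly from two (date, count) points to the date the count hits zero."""
    days = (d2 - d1).days
    if days <= 0 or n1 <= n2:
        return None  # no measurable decline, nothing to extrapolate
    rate_per_day = (n1 - n2) / days
    return d2 + timedelta(days=math.ceil(n2 / rate_per_day))


estimate = projected_zero_date(date(2021, 11, 21), 10_900, date(2021, 12, 27), 4_800)
print(estimate)  # 2022-01-25
```

With these inputs the straight line hits zero in late January 2022, which is in the same ballpark as the estimate above. In practice the tail end of such a cleanup tends to be slower than a straight line suggests, so treat the date as a best case.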

And since internal links are somewhat essential to how search engines "understand" a website, it is very nice to see that the number of internal links also keeps getting back to more normal levels. When I started writing this study, the count was closer to 900K links. Then it dropped to 783K, and it is now slightly below 600K. So another good 20% decrease there. But the number is still significantly off, and it will likely take months and months to fix.

Looking at performance data... not much is happening. Click data shows a somewhat stable line (no gains but also no losses). Impressions and average position are both heading south whereas CTR has gone up - this is mostly about seasonal flux. What else can one say, other than that fixing SEO issues occasionally requires lots and lots of patience. One tiny step at a time.

Update - Jan 31st 2022

So far I have not written much about performance metrics, mostly because there has not been much to talk about: the line has been flat. But finally it seems that something is about to change. During the past month the number of clicks has increased by 20% compared to the average of the past three months. And other vitals show positive development too. Time will tell whether this sticks, but so far so good.

The health statistics of the site have gone through quite a change. Crawled, currently not indexed is down to 19.9K (a decrease of yet another 10%). Interestingly, on the same day this statistic dropped heavily, Google traffic took a jump upwards. Indexed, not submitted in sitemap has been following my prediction, and is some 400+ pages away from reaching zero.

If the current positive trend keeps up for the next 2-3 months, I will move to the next recovery step of warming up the site with some new high quality content and fresh backlinks. So some interesting times ahead.

To be continued....

The next update will happen sometime in late February or early March 2022.
