Crawl Request Breakdown - the unsung hero of onsite SEO

Google Search Console (aka GSC) has evolved a lot over the years, and one of its newer features is called Crawl Request Breakdown.

There is no reason to panic if you have not seen or heard of it before. The feature is fairly well hidden: it is buried deep under Settings > Crawling > Crawl Report once you are logged in to GSC.

Crawl Request Breakdown is an excellent broken resource finder, with two twists that set it apart from every other tool out there.

First, because the tool comes from Google, it shows what Googlebot is actually seeing and failing to fetch. No other tool can provide this data.

And second, unlike most link topology tools out there, Crawl Request Breakdown gives you access to real historical data. This matters because when an SEO starts working with a site, often all we have on our hands is the current version of the site. Unfortunately, SEO is not just about the present moment: what happened weeks or months ago may affect the site for months into the future.

So what is this tool all about?

Although it is basically just a dynamic technical delivery report, it is also a very powerful UX tool. And as most SEOs know, UX is very much at the core of SEO these days.

If a user downloads 100 resources (be it a page with images, scripts, styles, fonts, JSON, XML, whatever) and 10% of those requests fail, how do you think you are doing? Site builders can often get 'lazy' and skip petty fixes unless those cause major issues on the user side or for server performance. But here's a question: how is Google supposed to know that the stuff that is not working is petty? Imagine the postman was supposed to deliver 100 packages to your door, but you received only 90. If such "package loss" happened in real life, you would likely be filing complaints about the postman. Here, your site is the postman, and Google (and everyone using Google to discover your site) are your customers.
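To make the "package loss" idea concrete, here is a minimal Python sketch that computes the failure rate for a single page load. It assumes you already have a mapping of resource URLs to HTTP status codes (for example gathered from your own server logs or a crawler); the `package_loss` function, the `DeliveryReport` class and the sample URLs are hypothetical, and only 4xx/5xx responses are counted as failed deliveries.

```python
from dataclasses import dataclass


@dataclass
class DeliveryReport:
    requested: int
    failed: list[str]

    @property
    def failure_rate(self) -> float:
        # Share of requested resources that were not delivered (0.0 to 1.0).
        return len(self.failed) / self.requested if self.requested else 0.0


def package_loss(statuses: dict[str, int]) -> DeliveryReport:
    """Collect resources whose HTTP status signals a client or server error (4xx/5xx)."""
    # Sorting keeps the list of failed URLs stable between runs.
    failed = sorted(url for url, status in statuses.items() if status >= 400)
    return DeliveryReport(requested=len(statuses), failed=failed)


if __name__ == "__main__":
    # Hypothetical page: 90 resources load fine, 10 come back as 404 or 500.
    page = {f"/assets/img-{i}.png": 200 for i in range(90)}
    page.update({f"/assets/missing-{i}.js": 404 for i in range(8)})
    page.update({"/api/data.json": 500, "/fonts/brand.woff2": 404})
    report = package_loss(page)
    print(f"{report.requested} requested, {len(report.failed)} failed "
          f"({report.failure_rate:.0%})")
```

Running it prints `100 requested, 10 failed (10%)`, which is exactly the 90-out-of-100 delivery from the postman example.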

Where to draw the line between a healthy and an unhealthy site?

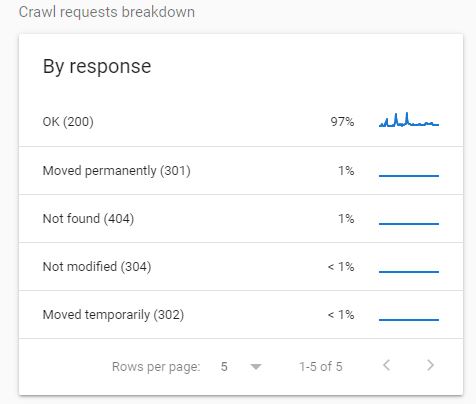

If the website is healthy, the Crawl Request Breakdown looks something like this:

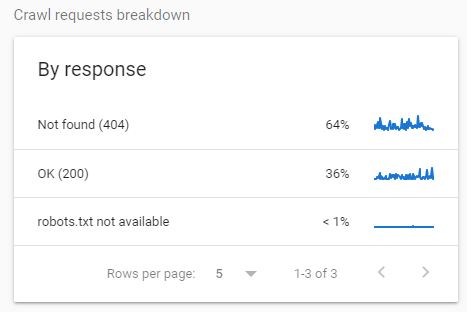

On the other hand, if the website is in an unhealthy state, the Crawl Request Breakdown shows a totally different set of statistics.

Based on my personal experience, I would say that if the HTTP 200 (OK) share drops below 95%, the website is entering dangerous waters. To go back to my earlier postal comparison: Finnish Post, for example, fails to deliver approximately 3% of parcels annually, and yet people complain a lot about delivery issues. I think that says it all.
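The 95% rule of thumb is easy to check by hand, but a small Python sketch makes the arithmetic explicit. It assumes you have copied the request counts per response type from the Crawl Request Breakdown into a dictionary yourself; the labels below are meant to mirror the report's response categories but are illustrative, and the `ok_share` and `health` helpers are hypothetical names, not part of any Google API.

```python
# Assumption: counts copied by hand from the Crawl Request Breakdown "by response"
# view; the dictionary labels are illustrative, not an official export format.
OK_THRESHOLD = 0.95  # the author's rule of thumb: below 95% OK means trouble


def ok_share(by_response: dict[str, int]) -> float:
    """Return the fraction of crawl requests that were answered with HTTP 200."""
    total = sum(by_response.values())
    if total == 0:
        raise ValueError("no crawl requests to evaluate")
    return by_response.get("OK (200)", 0) / total


def health(by_response: dict[str, int], threshold: float = OK_THRESHOLD) -> str:
    """Label the site as healthy or in dangerous waters based on its OK share."""
    share = ok_share(by_response)
    verdict = "healthy" if share >= threshold else "dangerous waters"
    return f"OK share {share:.1%}: {verdict}"


if __name__ == "__main__":
    # Hypothetical counts for one site over the report period.
    sample = {
        "OK (200)": 9_120,
        "Moved permanently (301)": 310,
        "Not found (404)": 420,
        "Server error (5xx)": 150,
    }
    print(health(sample))
```

With these sample counts, 9,120 of 10,000 requests returned 200, so the script prints `OK share 91.2%: dangerous waters`. Note that redirects count against the OK share here, just as they do not show up in the 200 section of the report.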

Walk before you run

One of the funny sides of SEO is that it does not have to be rocket science every time. If I received a dime every time a website that was having a hard time in the SERPs turned out to have issues with internal linking, I would be a rich man. So the lesson for the week is: walk before you run. Build a rock-solid foundation first, and only once you have the internal structure of your own website under control should you start focusing on other issues.

Like this article?

Good, then my job is done ;) Feel free to give me some backlink love in any way you want to.

Want to know when more tips like this come out?

Great, let's connect on LinkedIn. I will post an update whenever something new comes out.